Self-Tuning Parameters of a Maglev Control System Based on Q-Learning

Abstract:

Maglev transportation, as an innovative mode of rail transit, is regarded as an ideal future transportation system due to its wide speed range, low noise, and strong climbing ability. However, the maglev control system faces challenges such as significant nonlinearity, open-loop instability, and multi-state coupling, leading to issues like insufficient tuning and susceptibility to environmental influences. This paper addresses these problems by investigating the self-tuning parameters of a maglev control system using Q-learning to achieve optimal parameter tuning and enhanced dynamic system performance. The study focuses on a basic levitation unit modeled after the simplified control system of an electromagnetic suspension (EMS) train. A Q-learning reinforcement learning environment and Q-learning agent were established for the levitation system, with a forward "anti-deadlock" reward function and discretization of the action space designed to facilitate reinforcement learning model training. Finally, a Q-learning-based method for self-tuning the parameters of the maglev control system is proposed. Simulation results in the Python environment demonstrate that this method outperforms the Linear Quadratic Regulator (LQR) control method, offering better control effects, improved robustness, and higher tracking accuracy under system parameter perturbations.

1. Introduction

The EMS-type maglev train [1], [2] achieves contactless support, guidance, traction, and partial braking through the electromagnetic attraction or repulsion generated by the interaction of coil currents. It also acquires electrical energy through magnetic induction without relying on a pantograph. This breaks the speed limit imposed by the traditional wheel-rail adhesion relationship, enabling higher speeds. At present, China has successfully completed the prototype and joint debugging of the 600 km/h high-speed maglev system [3], marking a new stage in the research of high-speed maglev transportation in China.

As an indispensable part of ensuring the stable operation of the EMS-type high-speed maglev train, the performance of the maglev control system directly determines the safety and comfort of the train [4]. Research on control systems and their algorithms began in the mid to late 20th century. In 2002, a composite control model combining PID with Fuzzy control is raised [5], which accomplishes PID parameter self-adjusting by employing Fuzzy reasoning, and shows great control effects. Cho and Kim [6] designed a fuzzy PID controller, which combines a traditional PID controller with a fuzzy controller. Based on the characteristics of system deviation, direction, and trend, decisions are made according to Teaching-Learning optimization to automatically adjust PID parameters. To compensate for the mismatch defect of PID control in nonlinear systems, Wang and Zhang [7] puts forward the theory of variable universe fuzzy PID algorithm-by selecting the right expansion factor to realize domain contraction, which can increase the fuzzy control rules, so as to improve the control precision. Additionally, in terms of explaining the PID control parameter adjustment rules for the maglev system, Ryan and Corless [8] proved that for a subclass of uncertain systems, a Maglev suspension control system, the zero state can be (a) rendered 'practically' stable (in the sense that, given any neighbourhood of the zero state, there exists a control, in the proposed class of continuous feedback controls, which guarantees global uniform ultimate boundedness with respect to that neighbourhood), or (b) rendered globally uniformly asymptotically stable by the proposed discontinuous feedback control.

Moreover, using modern control theory, scholars have proposed a series of nonlinear methods that can solve the stable suspension of the maglev system based on the premise of knowing the accurate model of the maglev system [9], [10], [11]. Tang and Yang [12] introduced a boundary variable power Fal function to replace the Sign function and associated the sliding mode gain and hyperplane function to eliminate system chattering instability in their improved sliding mode control algorithm based on Linear Extended State Observer (LESO). Hu et al. [13] improved the suspension performance of the system using Extended State Observer (ESO) technology combined with model reference sliding mode control and improved reaching law sliding mode control strategies. Additionally, this type of nonlinear control method can also improve the disturbance rejection performance of the maglev control system, ensuring suspension performance in the event of sensor failure. For example, Long et al. [14] propose designing Auto-Disturbance-Rejection (ADRC) control algorithm in train automatic operation system. From analyze of simulation of tracing the Automatic Train Operation curve, it shows that the this ADRC algorithm can meet the needs of maglev train automatic control, increasing the system's disturbance rejection.

In the era of intelligence, research in artificial intelligence has gradually penetrated the field of maglev control [15]. To reject the disturbance and parameter perturbations, an adaptive neural-fuzzy sliding mode controller is presented [16], which employs a sliding mode control, adaptive-fuzzy approximator, and the neural-fuzzy switching law, whose controller significantly reduces the impact of the disturbance and parameter perturbations ,showing good control system performance in simulations and experiments. Zhao et al. [17] used deep reinforcement learning methods, utilizing a double Q-learning scheme to reduce estimation errors in the Q function and used a PID controller to accelerate convergence, achieving suspension control and optimization based on a medium and low-speed maglev train dataset.

Therefore, for the maglev train suspension control system, the future development trend is bound to be towards high intelligence, excellent robustness, and good maintainability. Conducting adaptive reinforcement learning research on self-tuning control system parameters is of certain significance for consolidating maglev technology reserves and developing a new generation of highly intelligent high-speed maglev trains.

2. Levitation System Modeling

As shown in Figure 1, the topology of the maglev system of the EMS-type maglev train consists of four units: control unit, drive unit, motion unit, and feedback unit. These units can complete the levitation functions from lifting off to levitating and then to maintaining stability. A carriage of the EMS-type maglev train is composed of several levitation bogies, each of which consists of four basic levitation units, as shown in Figure 2. It is not difficult to find that there is consistency in functionality between the topological structure and the basic levitation unit, and the study of the basic levitation unit is representative to a certain extent [18].

Assumptions: Ignore the possible magnetic leakage in the electromagnet windings; the magnetic field generated by the electromagnet is uniform in the action area and conforms to infinite magnetic permeability; ignore adverse phenomena such as magnetic field saturation, hysteresis, and residual magnetism in electromagnetic phenomena:

where, $L(c, i)$ represents the inductance value corresponding to the determined coil current $i$ and the determined gap value $c$; $N$ is the number of turns of the electromagnet winding; $\varphi_T$ is the main pole magnetic flux; $A$ is the core area; $\mu$ is the vacuum permeability; $i(t)$ is the control current at time $t$; $c(t)$ represents the gap value at time $t$.

Then combining the inductance formula with the relationship between magnetic induction intensity and main pole magnetic flux, we have:

where, $F_e(i, c)$ represents the electromagnetic attraction corresponding to the determined coil current $i$ and the determined gap value $c$.

In the vertical direction, according to Newton's second law, the kinematic equation of the levitation electromagnet can be expressed as:

where, $m$ represents the weight allocated to a single levitation unit, including the weight of the vehicle body; $g$ represents the local gravitational acceleration; $f_d(t)$ represents the external force disturbance at time $t$.

At the equilibrium position, there is:

Ignoring the disturbance term and performing approximate linearization, we have the Taylor expansion at the equilibrium point, ignoring higher-order terms:

The open-loop transfer function of the entire system is:

Obviously, the characteristic equation of the system has a zero point on the right half-plane of s, making the system open-loop unstable and requiring external feedback control.

3. Design of the Levitation Control System Parameter Tuning Algorithm Based on Q-Learning

Reinforcement learning is a type of learning that originates from the field of artificial intelligence. Its core idea is to enable an agent to gradually learn how to make optimal or near-optimal decisions in a complex environment through a series of trial-and-error processes, guided by a specific reward function [16], [21]. Q-learning is a type of reinforcement learning that is based on the temporal difference method. By using the maximization of the subsequent state's Q value as the target value, it iteratively updates the Q function and ultimately converges to the optimal Q function to obtain the optimal policy [22]. The parameter training framework of the maglev control system based on reinforcement learning is shown in Figure 3.

$Q(U, a \mid s)$ represents the expected total sum of future cumulative reward functions obtained by executing all subsequent actions using policy $U$ after taking action $a$ under state $s$.

$V(U \mid s)$ represents the expected total sum of future cumulative reward functions obtained by executing a series of actions using policy $U$ under state $s$.

Naturally, we know from the Bellman equation that $Q(U, a \mid s)$ and $V(U \mid s)$ satisfy:

As previously mentioned, the Q-learning algorithm is a learning interaction process based on the optimal Q-function. Its update strategy can be derived from Eqs. (8) and (9):

In the equations, $\alpha$ represents the learning rate, which quantifies the impact of each learning iteration; $\gamma$ is the discount factor, which measures the importance of future rewards.

At the same time, the optimal Q-function $Q^*(a, s)$ also satisfies the Bellman equation, and its transformation relationship is:

In the equations, $k$ represents the number of iterations, and $s^{\prime}$ and $a^{\prime}$ represent the values of $S$ and $A$ at the next time step in the iteration.

For the single-point maglev model agent, its operating range must have definite upper and lower limits, including but not limited to the upper and lower limits of speed $c(t)$, the upper and lower limits of levitation gap $c(t)$, the upper limit of adjustment time $t_s$ (mechanism model time limit), and the upper and lower limits of control current $i$, etc.

The following values are specified:

Additionally, the action space for the self-tuning algorithm of levitation control parameters based on Q-learning meets:

The action space here cannot be simply explained as the values or changes of Kp, Ki, and Kd, but rather the sum of the default initial values and the changes. It is important to note that during the Q-learning process, there is an expected cumulative reward value for the reward function. To express this value, the action space in Q-learning must ignore the default initial value to estimate the impact of its change on the cumulative expectation of the reward function and the effect of increasing the change on the cumulative expectation of the reward function.

For the reward function, we cannot only calculate the expected gap value because we require the overall steady-state performance of the system. Therefore, the reward function $r(i, s)$ is expressed as follows. To avoid the "deadlock phenomenon" caused by negative reward functions, which may lead to poor convergence during training, a positive reward function is adopted here. It uses an exponential form to expand the positive reward function [14], reducing or avoiding fatigue phenomena in reinforcement learning.

where, $O_c$ represents the overshoot value under a set of control parameters. $t_s$ is the adjustment time. $w$ is a positive coefficient, an important parameter for designing the positive function; $k_c, k_s$, and $k_o$ are the reward coefficients for the error value, adjustment time, and steady-state error, respectively. Among them, $k_o$ is a value less than 0 because the smaller the overshoot, the better the steady-state performance.

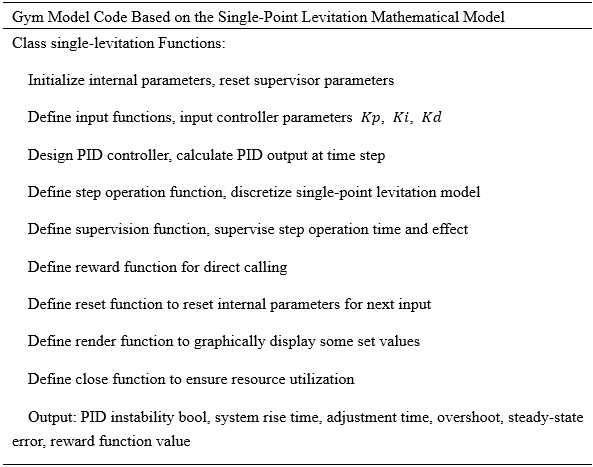

The Python mechanism modeling of the single-point maglev control system should be divided into the following steps:

(1) Construct a PID controller (simulator). When given the controller parameters (Kp, Ki, Kd) and the system's previous action (speed, position information), the PID's calculation output value can be fed back under this set of parameters.

(2) Construct a numerical feedback model in Python for the single-point maglev mechanism as a single environment step. After obtaining the PID calculation output value at this time, complete the output feedback of the single-point maglev mathematical system and output the system's next action information (speed, position information).

(3) Construct a monitor and termination program with certain monitoring capabilities. Under stable system conditions, output the steady-state indicators of the single-point levitation system mechanism model under given controller parameters, including rise time, adjustment time, overshoot, and steady-state error. Meanwhile, under unstable conditions, output a calibration condition to prevent the system from encountering singularities during reinforcement learning.

For convenience in subsequent research and development and standardizing the code, the Gym model framework based on neural learning cases was referenced when modeling the single-point levitation model. The code implementation is shown in the above figure.

The construction of the Gym environment is mainly based on Eq. (5). Given the system parameters, based on the transfer function relationship between the control quantity (current) and the controlled quantity (gap), Python solver tools simulate the environment feedback during a given single environment duration. It provides real-time transient information such as gap value and speed and dynamic performance information like system stability indicators, adjustment time, and steady-state error. The Q-learning system in this paper mainly uses dynamic performance information for training and simulation.

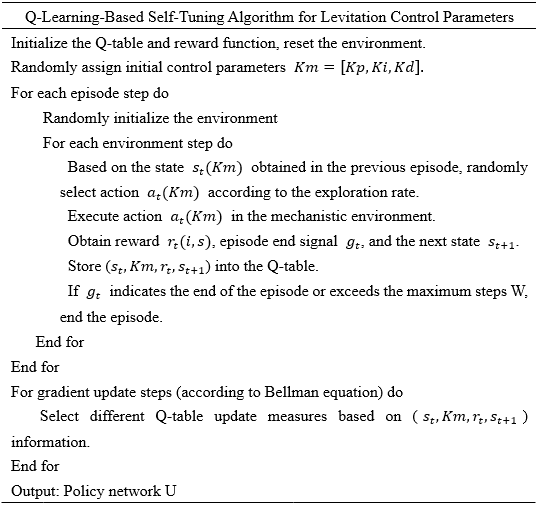

To implement the model functions, in addition to the single-point levitation mechanism model, it is necessary to design a training algorithm for the maglev PID control model based on Q-learning. The code is shown in the above figure. It should be noted that there is an exploration rate in the Q-learning algorithm, which was not mentioned earlier. This is because even with a positive reward function to prevent deadlock, the input-output relationship between the system and the reward function and the Q function cannot be purely linear, so “potential traps” exist. The reward function setting cannot fully guarantee crossing these “potential traps”, where a certain non-optimal control parameter's Q value and the corresponding Q values within the positive and negative action range during Q-learning may both be greater. However, this does not indicate that the control parameter is optimal. The existence of the exploration rate effectively solves the problem of “potential traps”.

4. Simulation Results Validation and Analysis

To validate the performance of the Q-learning-based tuning levitation control system, this method was compared with the traditional LQR method for control performance. The Q-learning algorithm and the traditional LQR method were compared under conditions of initial startup to stable levitation and system load change.

Key parameters in the Q-learning model include:

Total environment training update steps: 100000 times, maximum steps per episode: W=1200. Discrete PID sampling time: 0.001 s, discretization coefficient of control parameters: n=500.

Due to the program design, the total training steps in the environment cannot be infinitely large, so the steps were derived based on preliminary simulation and estimation.

The parameters used for comparison with LQR control are $Q=\left[\begin{array}{ccc}1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 150\end{array}\right]$, $R=4$, step time: 0.001 s. Here, the parameter $Q$ represents the state weighting matrix, where its value can relatively reflect the speed at which the LQR control system's state approaches the target value; $R$ is the control weighting matrix, where the larger the value, the slower the system's state decays.

Additionally, the basic physical quantities referenced in the algorithm are shown in Table 1.

| Parameter | Unit | Symbol | Reference Value |

|---|---|---|---|

| Single track load mass | Kg | M | 750 |

| Coil turns | - | N | 270 |

| Target gap | m | $c_0$ | 0.008 |

| Electromagnet area | $m^2$ | A | 0.115 |

| Vacuum permeability | H/m | $\mu$ | $4\pi \times 10^{-7}$ |

| Equilibrium current | A | $i_0$ | 19.1 |

(1) Steady-state levitation conditions

Here, we only considered the first 20,000 environment update steps. The variation images of the Action (i.e., coefficients in Km) are shown in Figure 4. It can be observed that at approximately 9,800 steps and 8,000 steps, Kp and Ki have both reached a nearly stable value, namely:

Additionally, it should be noted that the Kd parameter was not considered in Q-learning and was set to 0 by default.

(2) Simulation Training results under system load variation

For changes in system load, we modify the average load M to M'=820 kg as described above. After training the Q-learning reinforcement learning model, the output values of Km' are shown in Figure 5.

It can be seen that due to the change in system load, the environment stabilization steps increase. At approximately 17,500 steps and 13,000 steps, Kp' and Ki' have both reached a stable learning value:

(1) Static levitation

Figure 6 presents the gap values and velocity (gap derivative) values for Q-learning.

It is evident that the Q-learning PID achieves a steady-state adjustment time t of 0.17 s, compared to the 0.9 s adjustment time for LQR control, representing an improvement rate of 81.1%. Moreover, the slope of the rise curves for the two control systems indicates that the Q-learning PID responds much faster than the LQR control system, which is also visually reflected in the peak sizes of b.

It is clear from the figure that the Q-learning strategy is more effective and reliable. The control parameters reflected by the Q-learning PID control strategy show more efficient characteristics in steady-state levitation. Under the condition of satisfying control size constraints, the control current effect is utilized to achieve faster levitation and stabilization.

(2) System load variation

During the operation of magnetic levitation trains, the load on the bogie changes continuously due to the boarding and alighting of passengers. Figure 7 compares the levitation performance of Q-learning PID and LQR control when the system load changes from 750 kg to 820 kg.

It can be observed that when the system load changes, the LQR control method experiences a decrease in control capability, with an increased rise time and a significantly smaller curve slope compared to the slope of the curve obtained after retraining the Q-learning reinforcement learning model.

From the information in Figure 7, it is evident that due to the lack of reconfiguration of LQR system parameters, the adjustment time increases significantly from 0.9 s to approximately 1.2 s, representing a growth rate of 33.3%. This indicates that when using an LQR controller, adjustments to the QR values are necessary during system load changes. For the Q-learning PID control algorithm, the adjustment time only increases from 0.17 s to approximately 0.20 s after load changes. The system control parameters exhibit strong robustness and adaptability to system parameter perturbations.

(3) System parameter perturbations

During operation, magnetic levitation trains might encounter vertical perturbing forces when passing through tunnels and other environments. Figure 8 compares the system performance of Q-learning PID control and LQR control under an external perturbing force of 1 $\mathrm{m} / \mathrm{s}^2$.

In subgraph (a) of Figure 8, it can be seen that LQR control results in a significant gap variation when a constant external force F is applied, changing from 0.008 m to 0.020 m, which exceeds the control limit range. Furthermore, LQR control cannot correct the gap error, which remains at 0.020 m, indicating an imbalanced condition. In contrast, the Q-learning PID system performance is significantly better than LQR, with only about 0.7 mm of error.

This indicates that under the same external force, the effect of acceleration changes due to Q-learning control parameters is much smaller compared to LQR control.

In summary, the Q-learning PID control method demonstrates better robustness and faster response speed compared to LQR control. For situations involving external load changes or external forces, which result in significant perturbations to system parameters, the Q-learning-based magnetic levitation system parameter self-tuning PID control exhibits superior dynamic performance, robustness, and adaptability compared to LQR control.

5. Conclusion

For the problem of parameter tuning in magnetic levitation control systems and the associated issues, a self-tuning method based on reinforcement learning Q-learning was proposed. A standardized Gym reinforcement learning environment for the basic levitation unit was established, a forward "anti-deadlock" reward function was set, and a Q-learning algorithm path was used to find the optimal Q function. A Q-learning algorithm for self-tuning magnetic levitation control system parameters under discretized action space of control parameters was designed.

Compared to traditional LQR control methods, the proposed Q-learning PID control demonstrated superior performance. The steady-state levitation time for Q-learning PID control is only 18.9% of that for LQR control; during system load changes, the rise time for Q-learning PID control is 29.8% of that for LQR control; and under external perturbations, the steady-state error of Q-learning PID control is only 5.8% of that for LQR control. This confirms that the Q-learning-based magnetic levitation control system parameter self-tuning research can achieve optimization of control system parameters and dynamic performance. It provides the magnetic levitation control system with excellent parameter tuning capability, improved interference resistance, and robustness.

As the current Q-learning magnetic levitation system control parameter self-tuning algorithm is a simplified model and does not consider coupling situations or the self-tuning of Kd parameters, further detailed research will be conducted in the next phase to address these issues and achieve more outstanding self-tuning of magnetic levitation system control parameters.

The data used to support the findings of this study are available from the author upon request.

The author declares no conflicts of interest regarding this work.